Enhancing BI with AI-Driven Data Analysis and ChatGPT Power Query Integration

Navigating the Next Wave: How AI-Driven Data Analysis and ChatGPT Power Query Integration are Setting New Standards in Business Intelligence and Data Strategy

In today’s data-driven environment, AI-driven data analysis, particularly through integrating ChatGPT with Power Query, can significantly enhance our ability to analyse and interpret complex datasets.

One such approach combines Power Query, a data connection technology, with OpenAI’s AI models. This blog post walks through a practical example of AI-driven data analysis for BI, showing how Power Query and ChatGPT work together to preprocess and analyse data.

The Approach: A Step-by-Step Guide

Our method involves several steps, starting with loading data into Power Query, preprocessing it, and finally, analysing it with an AI model to infer gender based on names. Below you will find the full code.

Analyse data with ChatGPT - complete implementation code

Detailed walkthrough

1. Loading and Preparing Data

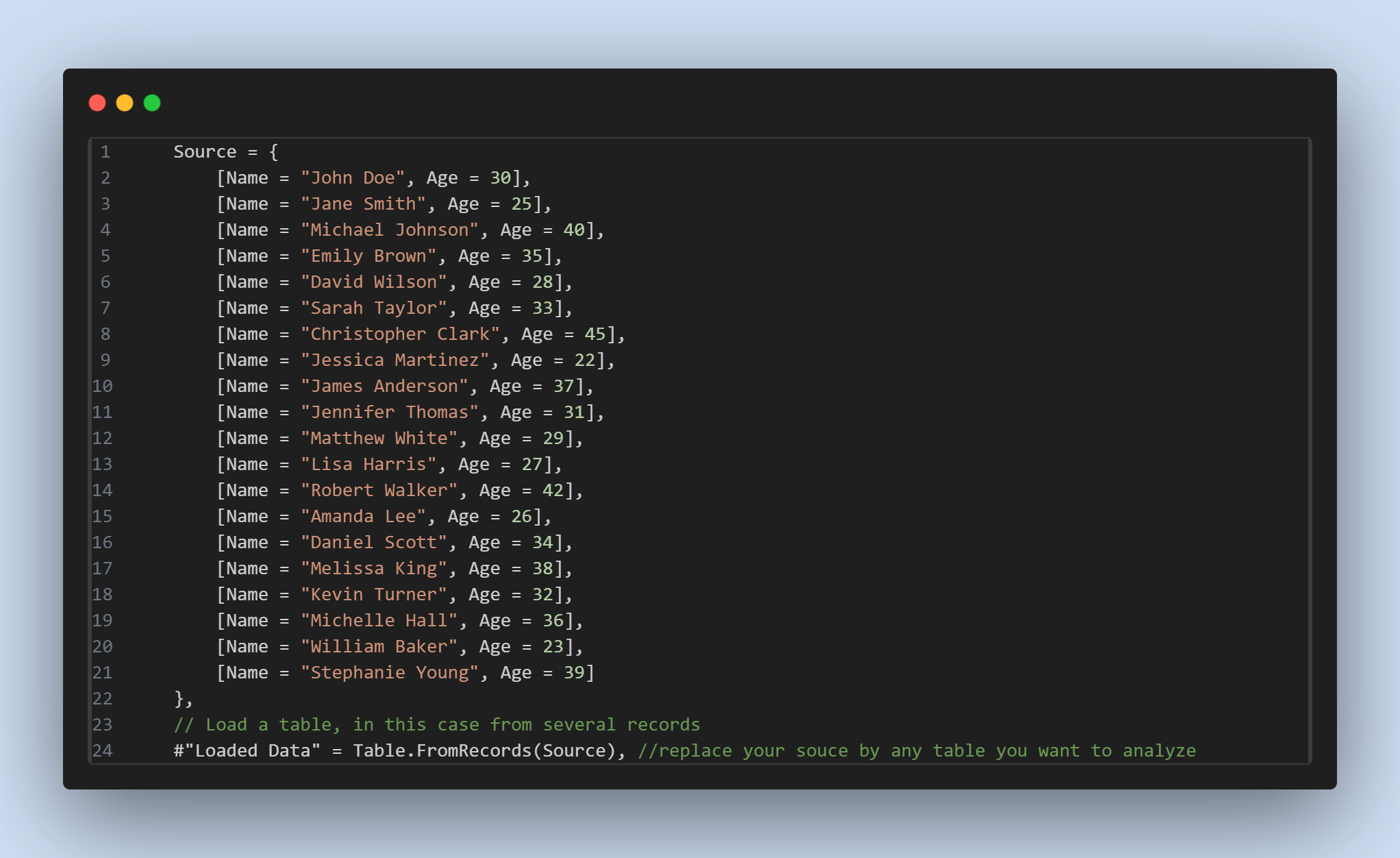

The process begins by loading data into Power Query. For this example, we use a simple dataset of names and ages; in a real-world scenario, it could represent a subset of a much larger database.

Power Query code that loads a table from records.

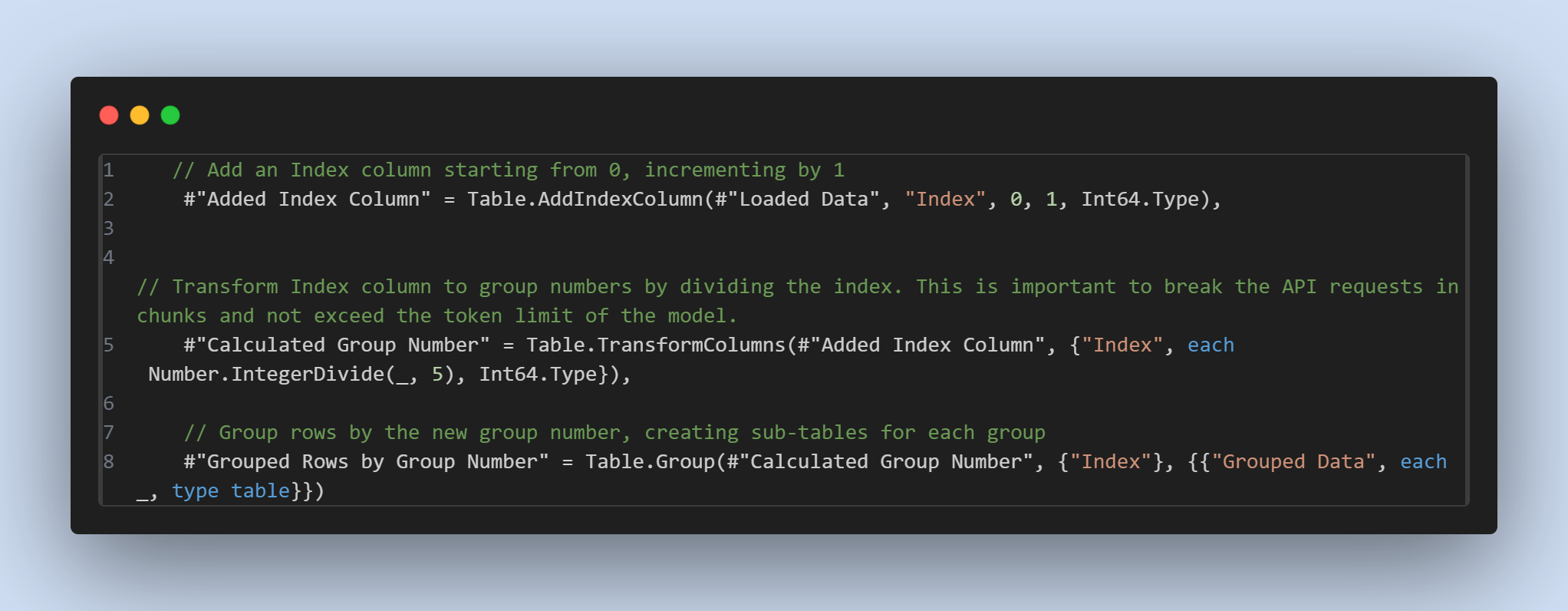
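As a point of reference outside Power Query, the loading step can be sketched in Python as a list of row dictionaries with a fixed schema. This is a minimal sketch under assumptions, not the article's M code: the sample names and ages are hypothetical, and `load_table` is an illustrative helper whose type coercion loosely mirrors building a typed table from records in M (for example with `Table.FromRecords`).

```python
from typing import Any

# Assumption: hypothetical sample records; the article's actual dataset is not reproduced here.
SAMPLE_RECORDS: list[dict[str, Any]] = [
    {"Name": "Alice", "Age": 34},
    {"Name": "Mohammed", "Age": 41},
    {"Name": "Yuki", "Age": 29},
]


def load_table(records: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Build a 'table' (a list of rows) with a fixed schema: Name (text) and Age (integer)."""
    table = []
    for record in records:
        # Coerce each field to its expected type; a missing column raises KeyError.
        table.append({"Name": str(record["Name"]).strip(), "Age": int(record["Age"])})
    return table


table = load_table(SAMPLE_RECORDS)
print(table[0])  # {'Name': 'Alice', 'Age': 34}
```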

2. Indexing and Grouping

After loading the data, we add an index column to assist in grouping the data. This step is crucial for managing API request sizes later on. Specifically, we divide the dataset into smaller chunks to ensure each API request stays within the model’s token limit. This division is achieved by calculating a group number based on the index.

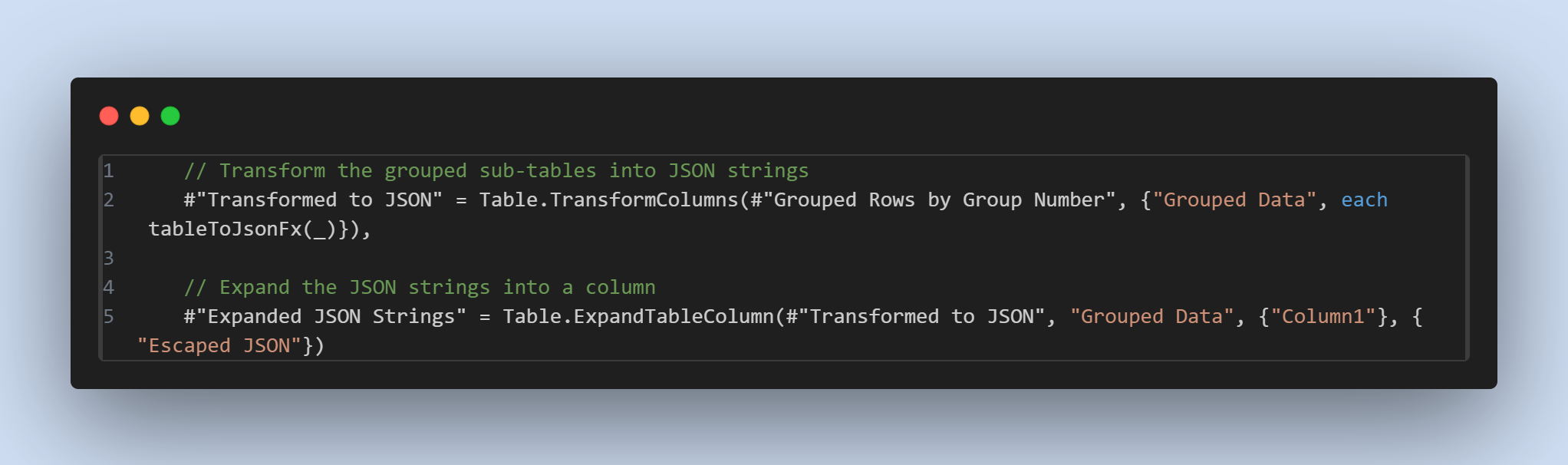

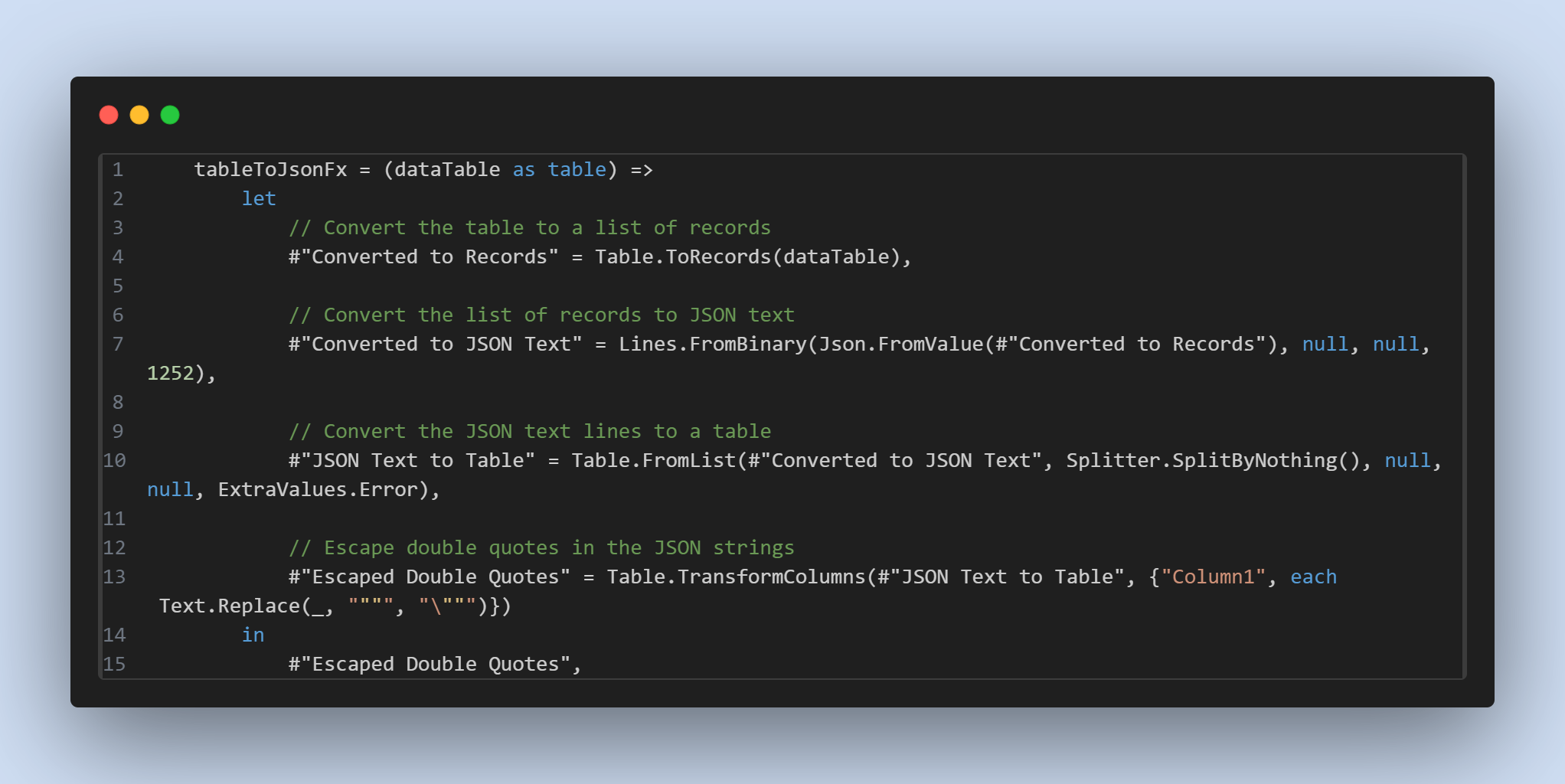
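The grouping logic can be sketched in Python as integer division of a 0-based index by a batch size. The article only says the group number is calculated from the index, so the formula, the `batch_size` value and the helper names below are assumptions; in M, the same pattern is often written with `Table.AddIndexColumn` and `Number.IntegerDivide`.

```python
from typing import Any


def add_index_and_group(rows: list[dict[str, Any]], batch_size: int) -> list[dict[str, Any]]:
    """Add a 0-based Index and a Group number so each group holds at most batch_size rows."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [
        # Assumption: group = index // batch_size, so indices 0..batch_size-1 form group 0, etc.
        {**row, "Index": i, "Group": i // batch_size}
        for i, row in enumerate(rows)
    ]


def split_into_groups(rows: list[dict[str, Any]]) -> dict[int, list[dict[str, Any]]]:
    """Collect rows by Group number, preserving their original order."""
    groups: dict[int, list[dict[str, Any]]] = {}
    for row in rows:
        groups.setdefault(row["Group"], []).append(row)
    return groups


names = [{"Name": n} for n in ["Ann", "Ben", "Cho", "Dev", "Eli"]]
indexed = add_index_and_group(names, batch_size=2)
print([r["Group"] for r in indexed])  # [0, 0, 1, 1, 2]
print({g: len(rs) for g, rs in split_into_groups(indexed).items()})  # {0: 2, 1: 2, 2: 1}
```

In practice, `batch_size` would be chosen so that one group's JSON, plus the prompt and the expected response, fits within the model's token limit.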

3. Converting to JSON

The next step transforms each group into JSON text, a format that is easy to embed in a request to the OpenAI API. To do this, we use a function that first converts each data group into a list of records, then serialises it as JSON text, and finally returns it to a table, with the double quotes escaped so that the text can be placed inside the JSON request body.

Section of the code where we use the function to convert data into JSON strings

Power Query function that converts the data into JSON strings
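The JSON conversion can be sketched in Python as below. This is an illustrative equivalent rather than the article's function: `group_to_json` and `escape_double_quotes` are hypothetical names, and escaping backslashes as well as quotes is an addition that keeps the output valid when names contain backslashes.

```python
import json
from typing import Any


def group_to_json(rows: list[dict[str, Any]], columns: list[str]) -> str:
    """Serialise one group's rows as compact JSON text, keeping only the given columns."""
    records = [{col: row[col] for col in columns} for row in rows]
    return json.dumps(records, separators=(",", ":"), ensure_ascii=False)


def escape_double_quotes(json_text: str) -> str:
    """Escape JSON text so it can be spliced into a larger JSON string literal."""
    # Assumption: backslashes are escaped first so existing escapes survive, then quotes.
    return json_text.replace("\\", "\\\\").replace('"', '\\"')


group = [{"Name": "Ann", "Index": 0}, {"Name": "Ben", "Index": 1}]
text = group_to_json(group, ["Name"])
print(text)                        # [{"Name":"Ann"},{"Name":"Ben"}]
print(escape_double_quotes(text))  # [{\"Name\":\"Ann\"},{\"Name\":\"Ben\"}]
```

Hand-escaping covers quotes and backslashes but not control characters such as newlines. If the whole request body is built with a JSON serialiser (`json.dumps` in Python, `Json.FromValue` in M), the serialiser performs this escaping automatically, which is usually the safer option.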

4. Analysing Data with OpenAI

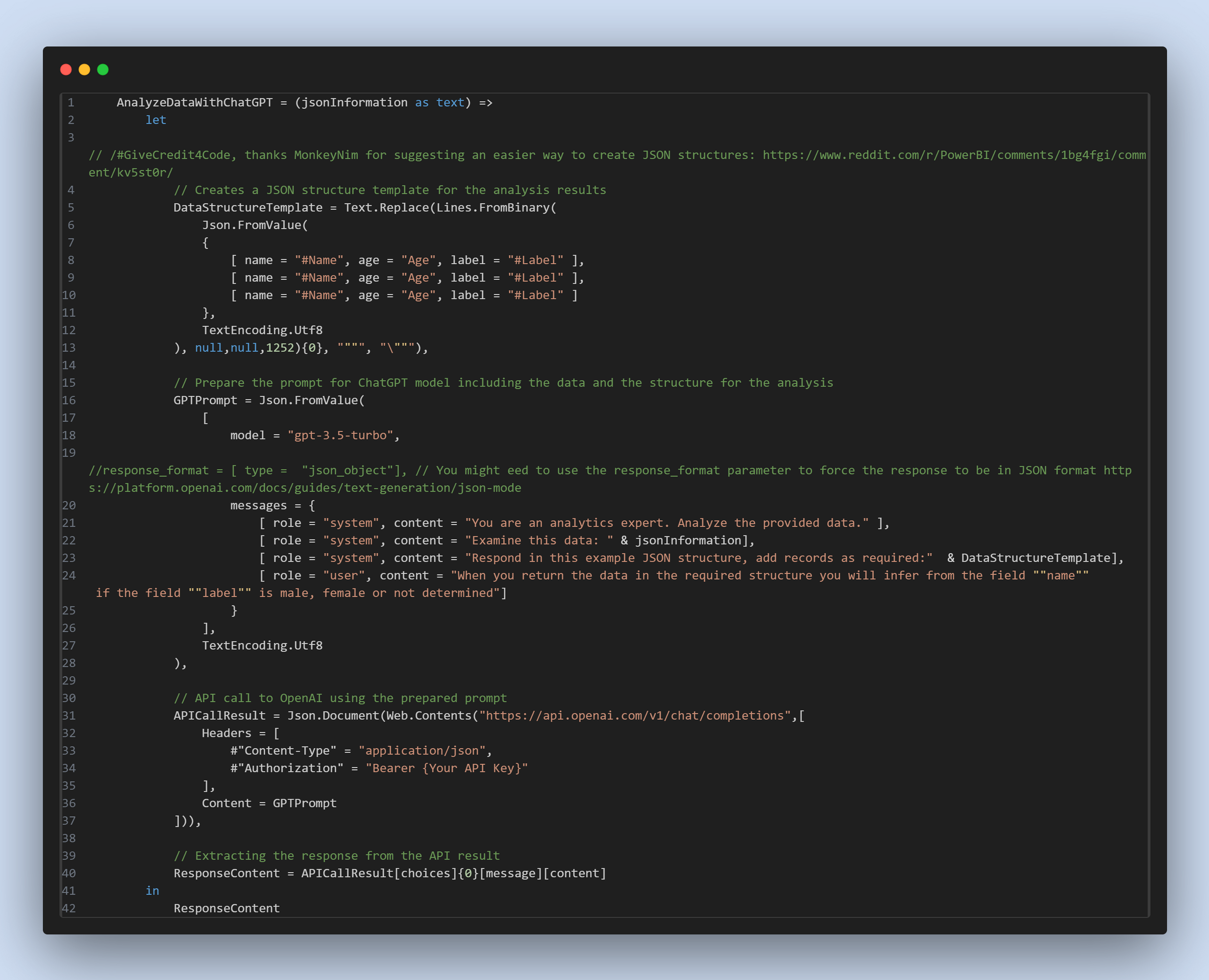

With our data now in JSON format, we can use OpenAI’s API to analyse it. In this example, we’re using OpenAI’s “gpt-3.5-turbo” model to predict the gender based on names. The analysis involves sending the JSON data to the API, which returns predictions in JSON format. This step is encapsulated in a custom function within Power Query that handles the API requests and response parsing.

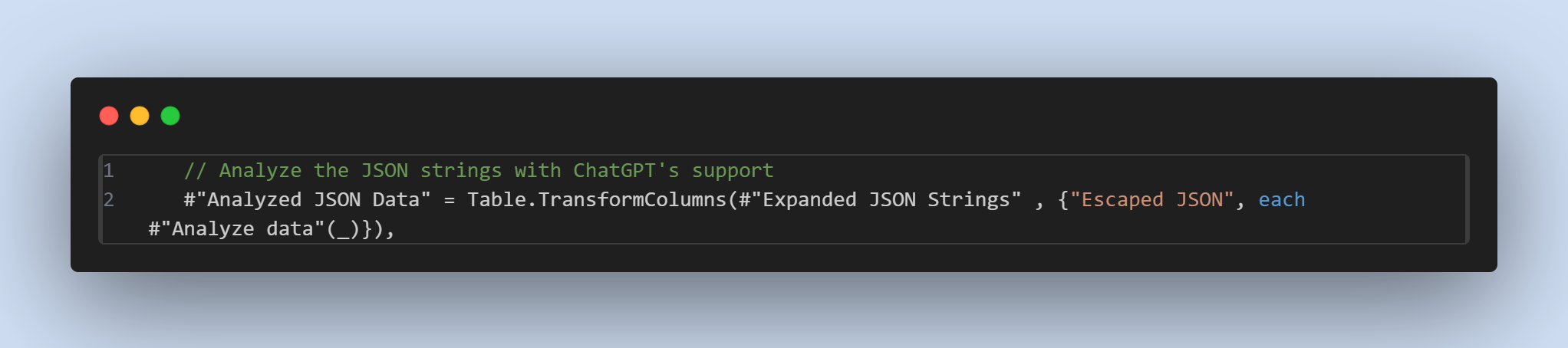

Section of the code where we use the function to analyse data with ChatGPT.

Power Query function that sends data to ChatGPT for analysis.

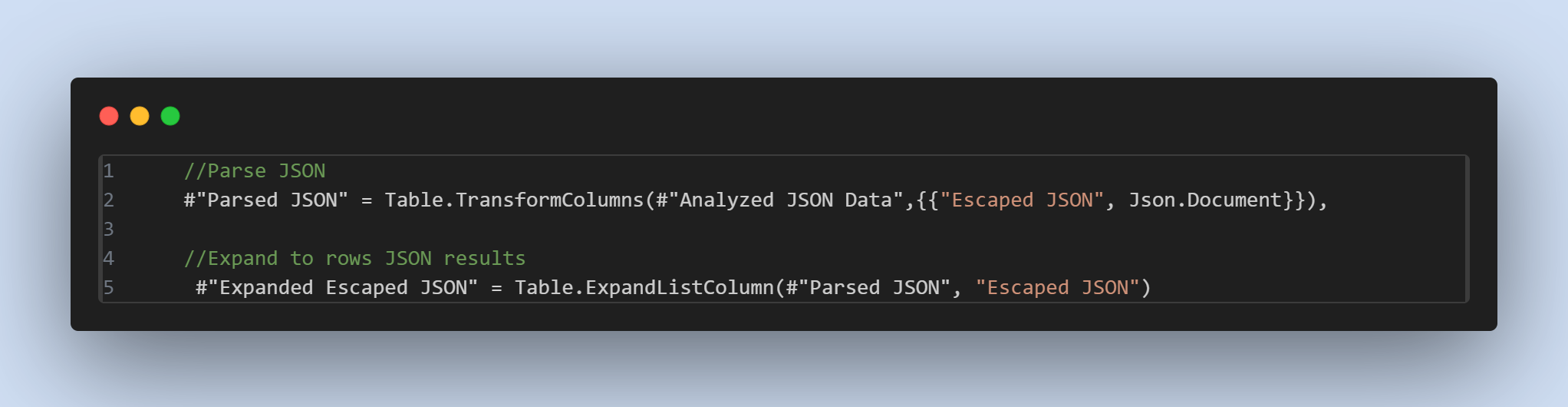
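The API step can be sketched in Python with only the standard library. It targets OpenAI's Chat Completions endpoint and reads the reply from `choices[0].message.content`; the system prompt, the expected `Name`/`Gender` output format and the helper names are assumptions for illustration, not the article's M function (which would typically issue the request with `Web.Contents`).

```python
import json
import urllib.request
from typing import Any

API_URL = "https://api.openai.com/v1/chat/completions"

# Assumption: hypothetical prompt; the article's exact wording is not shown.
SYSTEM_PROMPT = (
    "You receive a JSON array of objects with a Name field. "
    "Return only a JSON array of objects with Name and Gender fields, "
    "where Gender is one of: male, female, unknown."
)


def build_request(names_json: str, api_key: str, model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build a Chat Completions POST request for one batch of names."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": names_json},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),  # the serialiser escapes the embedded JSON text
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def parse_response(response: dict[str, Any]) -> list[dict[str, Any]]:
    """Extract the assistant message and parse it as the JSON array the prompt asked for."""
    content = response["choices"][0]["message"]["content"]
    return json.loads(content)  # raises json.JSONDecodeError if the model ignored the format


def analyse_batch(names_json: str, api_key: str) -> list[dict[str, Any]]:
    """Send one batch and return the parsed predictions (performs a network call)."""
    with urllib.request.urlopen(build_request(names_json, api_key), timeout=60) as resp:
        return parse_response(json.load(resp))
```

Asking the model to return JSON only and parsing it strictly means a malformed reply fails loudly instead of silently producing a wrong table.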

5. Post-analysis Processing

After receiving the analysed data from the API, we need to parse the JSON responses back into a tabular format. This conversion allows us to integrate the AI-inferred information (in this case, gender labels) into our original dataset.

Cleaning up the results.
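The post-processing step can be sketched in Python as flattening the parsed batches and left-joining the labels back onto the original rows. Matching on `Name` and the `"unknown"` default are assumptions; if names repeat or the model may alter them, joining on the index column from step 2 is more robust. In M, a left outer join with `Table.NestedJoin` and `JoinKind.LeftOuter` is the analogous operation.

```python
from typing import Any


def flatten_batches(batches: list[list[dict[str, Any]]]) -> list[dict[str, Any]]:
    """Combine the parsed prediction lists from every batch into one list of rows."""
    return [row for batch in batches for row in batch]


def merge_predictions(
    rows: list[dict[str, Any]], predictions: list[dict[str, Any]], default: str = "unknown"
) -> list[dict[str, Any]]:
    """Left-join the predicted Gender onto the original rows, matching on Name (an assumption)."""
    gender_by_name = {p["Name"]: p.get("Gender", default) for p in predictions if "Name" in p}
    # Every original row is kept; names the model skipped get the default label.
    return [{**row, "Gender": gender_by_name.get(row["Name"], default)} for row in rows]
```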

Real-world Implications and Use Cases

Integrating ChatGPT with Power Query opens up new possibilities for AI-driven data analysis and enrichment in the BI landscape. By automating the inference of information that would otherwise require manual research or assumptions (e.g., gender based on names), analysts can save time and make their datasets more complete.

This method can be particularly beneficial in areas such as:

- Demographic Analysis: When demographic information is incomplete or needs to be inferred for marketing, research, or reporting purposes

- Data Enrichment: Enhancing existing datasets with additional insights without manual data entry

- Customer Segmentation: Automatically categorizing customers based on available information

- Market Research: Inferring characteristics for better audience understanding

- Compliance and Reporting: Meeting reporting requirements that need demographic breakdowns

Challenges and Considerations

While this approach offers significant advantages, there are important challenges to consider:

Technical Challenges:

- API Rate Limits: Managing the frequency of API calls to avoid hitting OpenAI’s rate limits

- Data Volume: Processing large datasets efficiently while respecting API constraints

- Error Handling: Implementing robust error handling for API failures or unexpected responses
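Rate limits and transient failures are commonly handled with retries and exponential backoff. Below is a minimal Python sketch, assuming requests are made with `urllib` so failures surface as `urllib.error.HTTPError`; the status codes treated as retryable, the delays and the `with_retries` name are illustrative choices rather than OpenAI requirements (HTTP 429 is the standard "Too Many Requests" status).

```python
import random
import time
import urllib.error
from typing import Callable, TypeVar

T = TypeVar("T")

# Assumption: rate limiting (429) and transient server errors are retried; other errors are not.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def with_retries(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run call(), retrying retryable HTTP errors with exponential backoff plus jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return call()
        except urllib.error.HTTPError as err:
            if err.code not in RETRYABLE_STATUS or attempt >= max_attempts:
                raise  # not retryable, or out of attempts
            # Delay doubles each attempt (1s, 2s, 4s, ...) plus up to base_delay of random jitter.
            sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, base_delay))
            attempt += 1
```

With the defaults, the waits grow roughly 1, 2, 4 and 8 seconds (plus jitter) before the final attempt, so one rate-limited batch does not fail the whole refresh.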

Privacy and Security:

- Data Privacy: Managing data privacy when sending information to external services

- Sensitive Information: Ensuring no sensitive or personally identifiable information is unnecessarily exposed

- Compliance: Meeting GDPR, CCPA, and other data protection regulations
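One practical way to limit exposure is data minimisation: send only the fields the model needs. Below is a Python sketch, assuming names are stored in "Given Family" order so the first token is the given name (not true for every naming convention); the helper names are hypothetical.

```python
from typing import Any


def first_name(full_name: str) -> str:
    """Return the first whitespace-separated token (assumes 'Given Family' name order)."""
    parts = full_name.split()
    return parts[0] if parts else ""


def minimise_payload(rows: list[dict[str, Any]]) -> list[dict[str, str]]:
    """Keep only what the model needs (a first name); ages and other columns stay local."""
    return [{"Name": first_name(str(row.get("Name", "")))} for row in rows]


rows = [{"Name": "Ann Lee", "Age": 30, "Email": "ann@example.com"}]
print(minimise_payload(rows))  # [{'Name': 'Ann'}]
```

Because only first names leave the dataset, results then have to be joined back on the first name or an index rather than on the full name.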

Accuracy and Ethics:

- AI Prediction Accuracy: Validating and reviewing AI-generated predictions to ensure they meet required standards

- Bias Considerations: Understanding potential biases in AI models and their impact on results

- Ethical Use: Ensuring the ethical application of AI-inferred demographic data

Cost Management:

- API Costs: Monitoring and controlling costs associated with API usage

- Resource Optimization: Balancing accuracy with computational and financial resources

Best Practices for Implementation

When implementing AI-driven data analysis in your BI workflows, consider these best practices:

Data Preparation:

- Clean and validate your data before sending it to AI services

- Implement data sampling for large datasets to control costs

- Use batch processing to optimize API calls
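Cleaning and de-duplicating names before they are batched means each distinct name is sent, and paid for, only once. Below is a minimal Python sketch; the normalisation rules (whitespace collapsing, case-insensitive matching) and the `unique_clean_names` helper are assumptions that may not suit every dataset.

```python
from typing import Any


def unique_clean_names(rows: list[dict[str, Any]], column: str = "Name") -> list[str]:
    """Trim and collapse whitespace, drop blanks, and keep each name once (case-insensitive)."""
    seen: set[str] = set()
    result: list[str] = []
    for row in rows:
        # Missing or None values become "" and are dropped below.
        name = " ".join(str(row.get(column) or "").split())
        key = name.casefold()
        if name and key not in seen:
            seen.add(key)
            result.append(name)  # the first spelling encountered is kept
    return result
```

The predictions for the distinct names can then be mapped back to every matching row.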

Security:

- Store API keys securely using environment variables or key vaults

- Implement proper authentication and authorization

- Monitor API usage and access logs
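For a script-based client, reading the key from an environment variable can be sketched in Python as below. `OPENAI_API_KEY` is the variable name OpenAI's own SDKs read by default; the `get_api_key` helper is illustrative.

```python
import os


def get_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment instead of hard-coding it in a query or script."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail early with a clear message rather than sending an unauthenticated request.
        raise RuntimeError(f"Environment variable {var_name} is not set")
    return key
```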

Quality Assurance:

- Implement validation checks on AI-generated results

- Maintain human oversight for critical decisions

- Document your AI integration process for auditing
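Validation checks can be sketched in Python as comparing each response against what was sent: every returned name must be one we sent, every label must come from an agreed set, and no name may be missing. The label set and the `Name`/`Gender` fields are assumptions that would need to match the prompt actually used.

```python
from typing import Any

# Assumption: the label set the prompt asks for; adjust it to match your own prompt.
ALLOWED_LABELS = {"male", "female", "unknown"}


def validate_predictions(
    sent_names: list[str], predictions: list[Any]
) -> tuple[dict[str, str], list[str]]:
    """Check model output against what was sent; return accepted labels and a list of problems."""
    expected = set(sent_names)
    accepted: dict[str, str] = {}
    problems: list[str] = []
    for item in predictions:
        if not isinstance(item, dict):
            problems.append(f"not an object: {item!r}")
            continue
        name = item.get("Name")
        label = str(item.get("Gender", "")).strip().lower()
        if not isinstance(name, str) or name not in expected:
            problems.append(f"unexpected name: {name!r}")  # the model invented or altered a name
        elif label not in ALLOWED_LABELS:
            problems.append(f"invalid label for {name!r}: {label!r}")
        else:
            accepted[name] = label
    # Every name we sent should come back with a valid label.
    problems.extend(f"missing: {n!r}" for n in sent_names if n not in accepted)
    return accepted, problems
```

Rows listed in `problems` are natural candidates for human review rather than automatic acceptance.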

Performance:

- Cache results where appropriate to avoid redundant API calls

- Implement retry mechanisms for failed requests

- Monitor performance metrics and optimize accordingly
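Caching can be sketched in Python as a dictionary of previously inferred labels, so only names not seen before trigger an API call. `predict_with_cache` and the injected `fetch` callable are hypothetical; persisting the dictionary between refreshes (for example as a JSON file or a lookup table) is left out to keep the sketch small.

```python
from typing import Callable


def predict_with_cache(
    names: list[str],
    cache: dict[str, str],
    fetch: Callable[[list[str]], dict[str, str]],
) -> dict[str, str]:
    """Return a label for every name, calling fetch() only for names not already cached."""
    missing = [n for n in dict.fromkeys(names) if n not in cache]  # de-duplicated, order kept
    if missing:
        cache.update(fetch(missing))  # one call covers all cache misses
    return {n: cache[n] for n in names if n in cache}
```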

Conclusion

The synergy between Power Query and OpenAI demonstrates the powerful potential of combining traditional data processing tools with cutting-edge AI technologies. By leveraging this approach, data analysts and scientists can enhance their workflows, achieve more accurate insights, and unlock new possibilities in data analysis.

As AI technologies evolve, their integration into data processing and analysis workflows is likely to become more prevalent, opening new horizons for data-driven decision-making. The key is to implement these solutions thoughtfully, considering both the opportunities and the challenges they present.

Key Takeaways:

- AI integration can significantly enhance traditional BI workflows

- Proper planning and implementation are crucial for success

- Privacy, security, and ethical considerations must be prioritized

- The combination of Power Query and AI opens new possibilities for data enrichment

- Continuous validation and monitoring ensure reliable results

This integration represents just the beginning of what’s possible when we combine the robust data transformation capabilities of Power Query with the analytical power of AI. As these technologies continue to mature, we can expect even more sophisticated and seamless integrations that will further revolutionize how we approach data analysis in Business Intelligence.
